T-SQL Tuesday #113 -A Database for the Great Outdoors

This month’s T-SQL Tuesday comes from Todd Kleinhans (blog | twitter) who wants to know what we’re doing with databases outside of work.

I’m curious- outside of work and learning, what do you personally use databases for? Tracking books you have, recipes, collections, etc? While it can be said using databases for personal use could be either overkill or a hammer in search of nails on the other hand, it is exactly what they are for- storing data.

Getting Away from the Computer

I’ve been geocaching since 2001, less than two years after the US federal government shut off Selective Availability bringing accurate GPS receivers to the masses. If you aren’t familiar with geocaching, here’s the short version: people place containers of varying sizes out in the world, then post the location online. Others grab their handheld GPS (nowadays, more often it’s a smartphone) head to the location, search for the container, and sign a logbook stashed inside. But it’s not just a simple “X marks the spot” deal. These things are often hidden very well, but in plain sight. You haven’t lived until you’ve spent 45 minutes groping a pine tree looking for the one fake pinecone.

Part of the fun for me is the numbers and information you can mine out of the activity. Yes, I am quantifying my fun. Deal with it. Examples:

- I have found nearly 2200 geocaches scattered across 7 countries, including 13 US states

- I have recorded at least one find on every calendar day of the year

- If one were to travel to each cache I’ve found in sequence, the total distance would be nearly 64,000 miles

- I once traveled 2160 miles between two caches on the same day (I found one en route to the airport before PASS Summit 2012, and then one in Seattle after I landed)

- The geographic center of all my recorded finds is only about 4 miles from my home (which is unusual)

Where the Data Lives

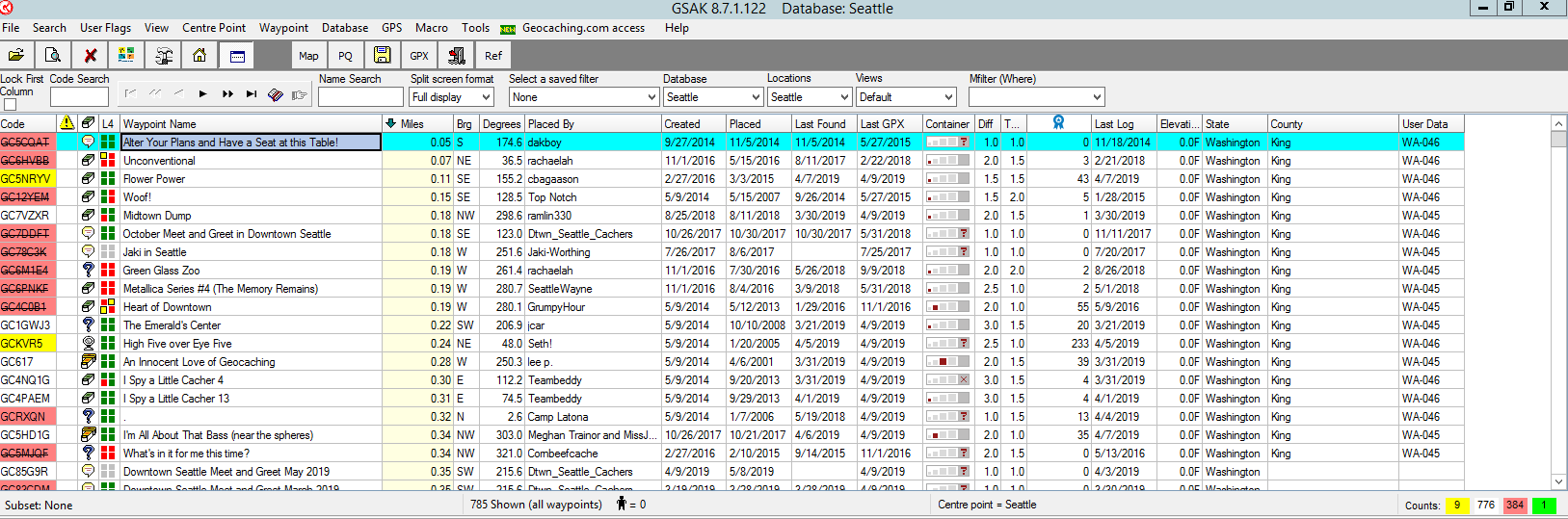

Despite the various changes and enhancements made to geocaching.com over the years, I continue to use a piece of desktop software to keep track of caches including extra waypoints and solved coordinates for puzzles. That program is called GSAK (Geocaching Swiss Army Knife) and (here’s why it’s relevant to this T-SQL Tuesday) it’s using several SQLite databases under the hood. This all works okay but things start to bog down once you’ve got 40,000 caches and over a million log entries in a database (my main database is close to 900MB).

The main GSAK window

For a moment, I considered writing my own queries directly against the database(s) to extract more data from them. As I dug into the database schema, however, I saw a number of things that I would have done differently, so I pivoted a bit and (sorry, bending the rules here) took it as a learning opportunity. The goal being material for blog posts and maybe even a SQL Saturday talk or two.

Making Work for Myself

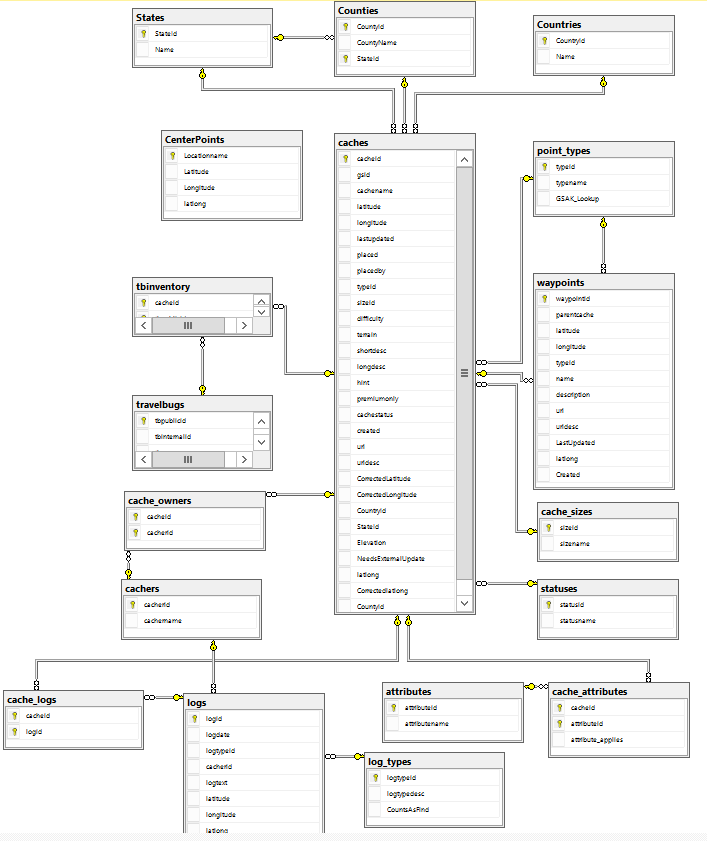

First, I extracted the schema from SQLite, looked it over, then created my own schema in SQL Server that was a bit more spread out.

My own geocaching database schema

Next, I tried creating a PowerShell module to import data directly from the XML files that geocaching.com makes available to paying members. I haven’t touched this code in several years and with quite a bit more experience under my belt, I’m cringing at the idea of even looking at my old code.

But just importing new data wouldn’t be enough; I needed a way to get thousands of records imported from SQLite into SQL Server. I have a lot of history in there, and data is changing daily. So I started working on a T-SQL driven ETL process to pull the data in. Part of that process turned into an early blog post of mine pertaining to creating linked servers from SQL Server to SQLite. That ETL code also hasn’t been touched in a long time; it’s in the link above, however. There are a number of issues with it that I just haven’t gotten around to working out.

So for now, my SQL Server geocache database is in a low-grade maintenance mode. Caches that I imported long ago are getting updated (mostly marked as archived as they’re retired). But the SQLite databases are still going strong and getting updated on a regular basis. I’m in GSAK a few times a week looking for new puzzles to solve, or looking for caches in places I’ll be traveling soon.